Researchers from Caltech and the University of Southern California (USC) report the first application of quantum computing to a physics problem. Using quantum-compatible machine learning techniques, they developed a method for extracting a rare Higgs boson signal from copious noisy data. The Higgs is the particle predicted to imbue elementary particles with mass; it was discovered at the Large Hadron Collider in 2012. The new quantum machine learning method performs well even with small training datasets, unlike its standard counterparts.

Despite the central role of physics in quantum computing, until now no problem of interest to physics researchers had been resolved by quantum computing techniques. In this new work, the researchers extracted meaningful information about Higgs particles by programming a quantum annealer, a type of quantum computer capable of running only optimization tasks, to sort through particle-measurement data littered with errors. Caltech's Maria Spiropulu, the Shang-Yi Ch'en Professor of Physics, conceived the project and collaborated with Daniel Lidar, a pioneer of the quantum machine learning methodology and the Viterbi Professor of Engineering at USC. Lidar is also a Distinguished Moore Scholar in Caltech's divisions of Physics, Mathematics and Astronomy, and of Engineering and Applied Science.

The quantum program seeks patterns within a dataset to tell meaningful data from junk. The researchers expect it to be useful for problems beyond high-energy physics. The program, along with comparisons to existing techniques, is described in a paper published on October 19 in the journal Nature.

A popular computing technique for classifying data is the neural network, known for its efficiency in extracting obscure patterns from a dataset. The patterns identified by neural networks are difficult to interpret, however, because the classification process does not reveal how they were found. Techniques that offer better interpretability are often more error-prone and less efficient.

"Some people in high-energy physics are getting ahead of themselves about neural nets, but neural nets aren't easily interpretable to a physicist," says Joshua Job, a USC physics graduate student, co-author of the paper, and guest student at Caltech. The new quantum program is "a simple machine learning model that achieves a result comparable to more complicated models without losing robustness or interpretability," says Job.

With prior techniques, classification accuracy depends strongly on the size and quality of the training set, a manually sorted portion of the dataset. This is problematic for high-energy physics research, which revolves around rare events buried in large amounts of noise. "The Large Hadron Collider generates a huge number of events, and the particle physicists have to look at small packets of data to figure out which are interesting," says Job. The new quantum program "is simpler, takes very little training data, and could even be faster. We obtained that by including the excited states," says Spiropulu.

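A quantum annealer encodes the best answer to an optimization problem in its lowest-energy (ground) state. Excited states carry excess energy and correspond to suboptimal outputs, which would ordinarily be treated as errors. "Surprisingly, it was actually advantageous to use the excited states, the suboptimal solutions," says Lidar.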

"Why exactly that's the case, we can only speculate. But one reason might be that the real problem we have to solve is not precisely representable on the quantum annealer. Because of that, suboptimal solutions might be closer to the truth," says Lidar.

Formulating the problem in a way a quantum annealer can process proved a substantial challenge, one that was successfully tackled by Alex Mott (PhD '15), Spiropulu's former graduate student at Caltech, who is now at DeepMind. "Programming quantum computers is fundamentally different from programming classical computers. It's like coding bits directly. The entire problem has to be encoded at once, and then it runs just once as programmed," says Mott.

Despite the improvements, the researchers do not assert that quantum annealers are superior. The ones currently available are simply "not big enough to even encode physics problems difficult enough to demonstrate any advantage," says Spiropulu.

"It's because we're comparing a thousand qubits (quantum bits of information) to a billion transistors," says Jean-Roch Vlimant, a postdoctoral scholar in high-energy physics at Caltech. "The complexity of simulated annealing will explode at some point, and we hope that quantum annealing will also offer speedup," says Vlimant.

The researchers are actively seeking further applications of the new quantum-annealing classification technique. "We were able to demonstrate a very similar result in a completely different application domain by applying the same methodology to a problem in computational biology," says Lidar. "There is another project on particle-tracking improvements using such methods, and we're looking for new ways to examine charged particles," says Vlimant.

"The result of this work is a physics-based approach to machine learning that could benefit a broad spectrum of science and other applications," says Spiropulu. "There is a lot of exciting work and discoveries to be made in this emergent cross-disciplinary arena of science and technology," she concludes.

This project was supported by the United States Department of Energy, Office of High Energy Physics, Research Technology, Computational HEP; Fermi National Accelerator Laboratory; and the National Science Foundation. The work was also supported by the AT&T Foundry Innovation Centers through INQNET (INtelligent Quantum NEtworks and Technologies), a program for accelerating quantum technologies.

Written by Mark H. Kim

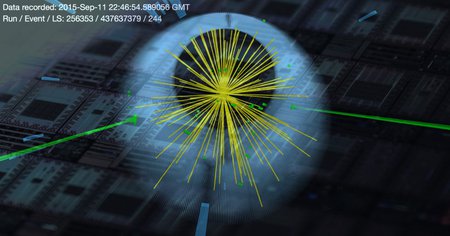

Higgs "di-photon" event candidate from Large Hadron Collider collision data, overlaid with a schematic of a wafer of quantum processors.

Credit: LHC Image: CERN/CMS Experiment; Composite: M. Spiropulu (Caltech)